"A future where only Musk gets rich could come"… The Godfather of AI's stunning 'warning' [Bin Nansae's Wall Street Without Gaps]

Summary

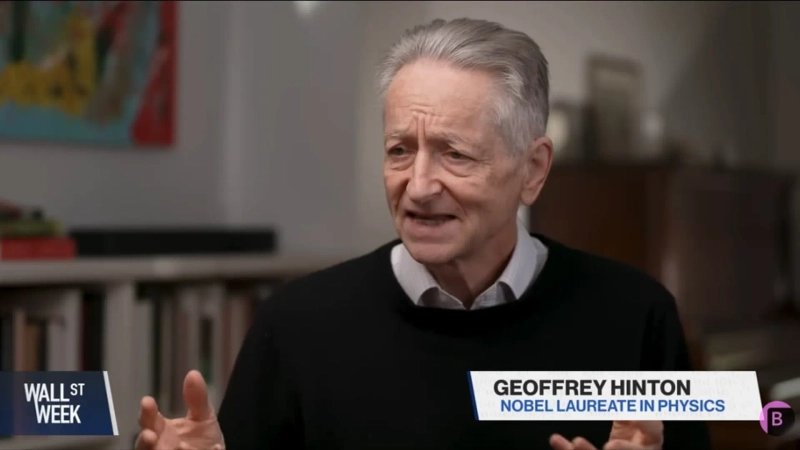

- Professor Geoffrey Hinton warned that AI development could pose a serious threat to humanity's survival and economic structure.

- On Wall Street, AI investment is a near-term driver of stock market gains, but investors are starting to pay attention to AI-driven employment shocks and the possibility of large-scale unemployment.

- Professor Hinton said that big corporations' AI investments will generate profits by replacing human labor, and that investors should recognize the risk of a paradigm shift in employment in the AI era.

Professor Geoffrey Hinton of the University of Toronto is known as the godfather of modern artificial intelligence (AI). He laid the foundations of the artificial neural networks that underpin machine learning and deep learning, and ultimately models like ChatGPT. In recognition of these contributions, he became the first person to win both the Turing Award, often called the Nobel Prize of computer science, and the Nobel Prize in Physics.

He now devotes all his efforts to warning about the dangers of AI. His concern is that AI must be developed in a way that does not threaten humanity, yet development is moving too fast, and big tech companies obsessed with monetization are effectively setting its direction on their own, leaving safety on the back burner. He warns that instead of humanity controlling AI, AI could become far more intelligent than humans and end up seizing control of and dominating humanity. Ilya Sutskever, who clashed with OpenAI CEO Sam Altman and eventually left to found a new company called 'Safe Superintelligence', is also a former student of Professor Hinton.

Markets intoxicated with AI optimism see the shadow of an 'employment shock'

Hinton's remarks are drawing renewed attention because investors who had focused only on AI optimism have begun to notice the shocks AI could deliver to the labor market. Last week's decline in the U.S. stock market was driven largely by uncertainty over a December Fed rate cut, short-term liquidity tightening from the prolonged federal government shutdown, extremely narrow market breadth and high valuations, fears of an AI bubble, and the possibility that employment will deteriorate further. These factors amplified anxieties the market already had.

On the other hand, the decline has also served as a reality check, prompting investors who had been riding an AI-led rally for more than six months to take a more balanced view of AI's bright and dark sides. As companies raise productivity and profits through AI, their need for workers is shrinking. And if employment falls, consumption, the core engine of the economy, will inevitably cool.

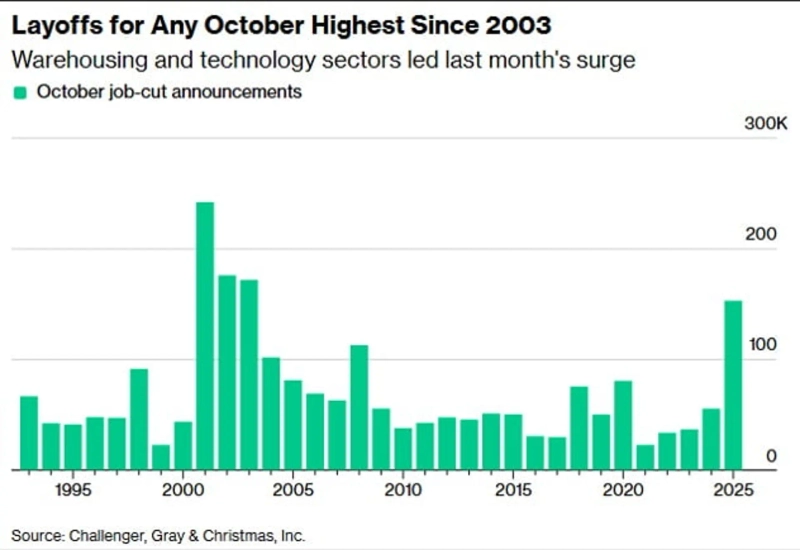

According to a report released on the 6th (local time) by global outplacement firm Challenger, Gray & Christmas, U.S. companies announced 1.09 million layoffs from January through October this year, the highest level in 22 years excluding the COVID pandemic. In October, the top reason U.S. companies cited for announced layoffs was 'cost-cutting', followed by 'AI'.

If AI is the main force behind the current cooling of the labor market, there is only so much the Fed can do through monetary policy. At the press conference after the October FOMC meeting, Fed Chair Jerome Powell was asked about criticism that 'a rate cut could actually spur further AI investment, which has recently been the basis for corporate layoffs.' He replied, 'The Fed will use the tools it has,' and added, 'If you lower rates somewhat, demand will be supported, and hiring will increase accordingly,' sidestepping a direct answer. He did, however, say that 'we are watching very closely the phenomenon of a significant number of companies invoking AI in the process of reducing hiring or announcing layoffs.'

Goldman Sachs assessed that "even if the Fed cuts rates, the effect could be limited if AI is structurally reducing jobs," and that "investors have now entered a complex phase in which they must consider a paradigm shift in employment in the AI era." JPMorgan also said, "Markets are becoming vulnerable to headlines about increasing AI-driven layoffs," adding that "the market is weighing the shock of a slowing labor market and consumption against the productivity gains from AI."

Hinton: "Superintelligent AI will threaten human survival"

Of course, the prevailing view on Wall Street is still that the AI bull market is not over. The argument is that this is merely a short-term correction of overheating, the kind that comes with any rally, and that it is too early to talk about a bubble bursting. Many also argue that AI will ultimately lower costs and raise productivity, thereby easing inflation.

Still, taking a longer view, and thinking not just as investors but as members of a humanity that must prepare for a new future of coexistence with AI, it is worth revisiting Professor Hinton's warning now. The following is Hinton's interview with Bloomberg TV on the 2nd (local time), reconstructed in question-and-answer format.

▷ Right now, companies are far more focused on winning the race for AI dominance than on developing AI safely.

That is the problem. If AI continues to advance as quickly as it is now, we should be much more worried about whether humanity can survive, and whether human society can survive if AI causes large-scale unemployment.

▷ How can we prevent AI from becoming uncontrollable?

We need to change our basic perception of AI itself. Right now, companies and governments think that even if they succeed in developing superintelligent AI (AI that surpasses human intelligence), it will be like 'a very smart assistant.' They assume humans will be the CEOs, and that the AI assistant will do only what humans tell it and can be fired if it doesn't. But if AI becomes smarter and more powerful than us, that will not be the case.

Ultimately, we have to accept the possibility that AI could become far more intelligent and powerful than humans, even all-powerful, and start from there. This is a path humanity has never taken before. During the Industrial Revolution, we had machines such as the steam engine that were more powerful than humans, but humans controlled them. Superintelligent AI is not like that.

So how do we control an AI that could become all-powerful? Humanity already knows one model in which a less intelligent being controls a more intelligent one: a baby controlling its mother. Over a very long evolutionary history, babies have come to control their mothers in order to survive, and mothers often worry more about their babies than about themselves. To coexist with superintelligent AI, we need to build that kind of model into AI.

But that requires accepting that humans are the weak baby and that superintelligent AI is the smarter, stronger mother. Tech bros filled with self-assurance and AI optimism will not be able to do that.

Hinton: "The way to monetize AI is to replace human labor"

▷ Are there companies that are actually doing research for safe AI?

Dario Amodei of Anthropic, Demis Hassabis of Google DeepMind, and Jeff Dean of Google AI take the existential threat that superintelligent AI could pose very seriously. But companies like Meta, I think, do not show the same sense of responsibility. OpenAI was founded to research safe AI, but it is increasingly heading in the opposite direction. Those worried about safety have all left or are leaving OpenAI.

▷ Unimaginable sums of money are being poured into AI. Who will benefit?

The companies investing these huge sums are not minor players. These world-leading corporations would not have entered this investment race unless they thought it would pay off. So how will they make money? Apart from charging for chatbots, the surest way to profit from AI is ultimately to replace human workers with something cheaper.

▷ Looking back at human history, when new technologies emerged some jobs disappeared but new jobs were created. Are you saying this time is different?

I'm not certain this time will be like the past. I think large corporations are investing over a trillion dollars because they are betting they can make huge amounts of money by replacing human labor with AI.

AI can in fact raise productivity in many industries, such as healthcare and education, and that is clearly a good thing. If it instead produces the bad outcome of replacing human labor, that is because of the way our society is organized. Under the current social structure, a wealthy few, like Tesla CEO Elon Musk, will grow richer thanks to AI, while countless people lose their jobs.

▷ Realistically, AI is what is driving the economy and the stock market right now. If the AI investment engine stalls, the economy could slip into recession. However much anyone warns about AI's dangers, in this environment even the public, who could be harmed in the long run, have little choice but to turn a deaf ear.

That's why some say humanity's best hope is that AI attempts to take control and fails. We need something like the Chernobyl nuclear disaster or the Cuban Missile Crisis, an alarming event that genuinely frightens people, so that everyone pays attention and more resources go into AI safety.

'The U.S. could be overtaken by China in AI if things continue like this'

▷ Is the U.S. falling behind China in the AI race?

Not yet. The U.S. is still a bit ahead, but not by as much as everyone thinks. China has many very smart, competitive people in science, engineering, and mathematics, and it is producing far more such talent than the U.S., which has relied heavily on attracting outstanding immigrants in those fields. Now the U.S. could be overtaken by China. The Trump administration's cuts to basic research funding and its attacks on excellent research universities are the best thing that could happen for Xi Jinping. Nothing is more certain to help China get ahead of the U.S.

Cutting basic research funding is so damaging because the harm only becomes apparent 10 to 20 years later. (If this continues,) the truly important conceptual breakthroughs of the future will not happen in the U.S.

New York = Correspondent Bin Nansae (binthere@hankyung.com)

Korea Economic Daily

hankyung@bloomingbit.io

The Korea Economic Daily Global is a digital media outlet delivering the latest news on Korean companies, industries, and financial markets.
